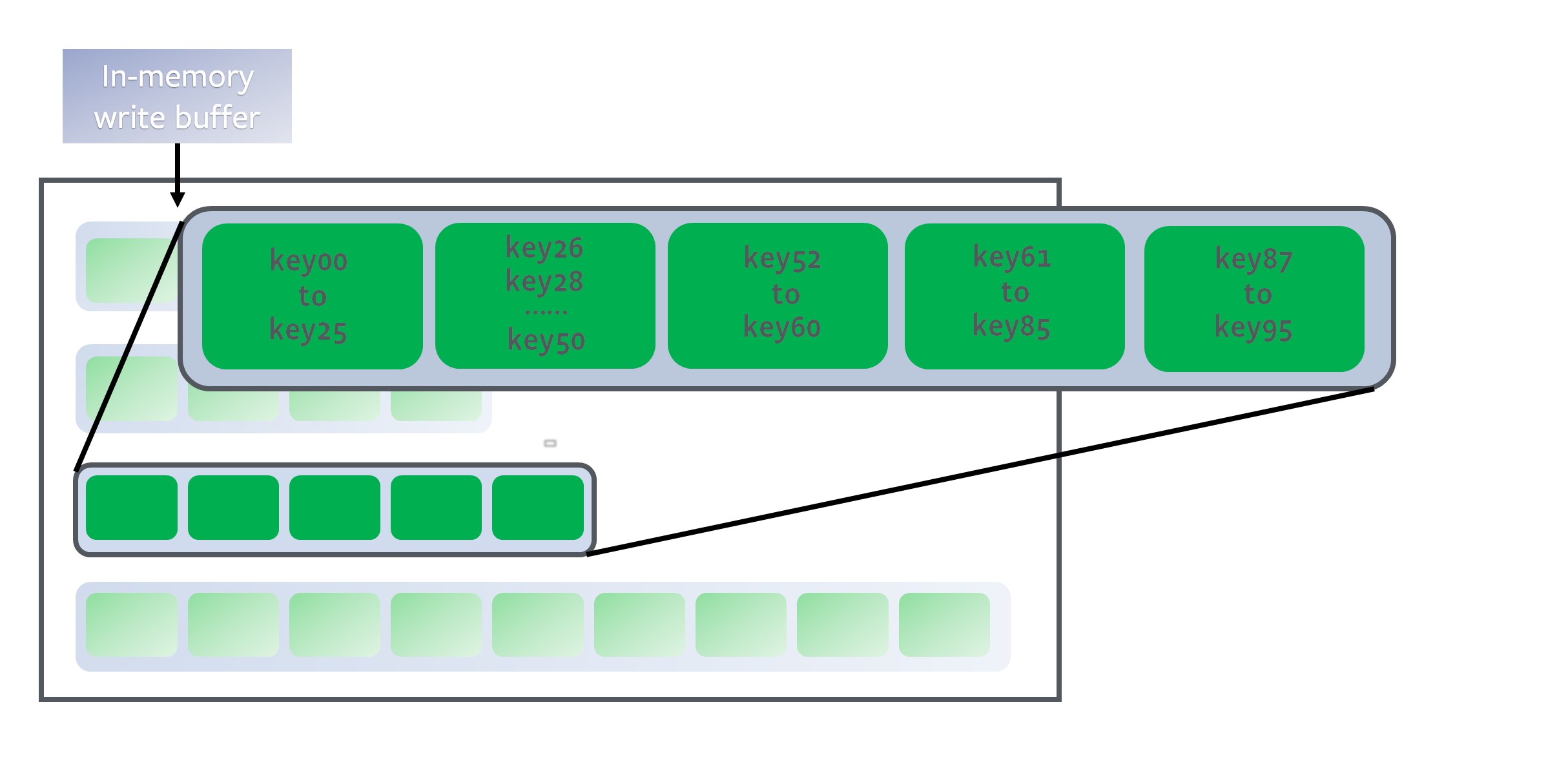

Inside each level (except level 0), data is range partitioned into multiple SST files:

The level is a sorted run because keys in each SST file are sorted and the files themselves cover disjoint, ordered key ranges. To identify a position for a key, we first binary search the start/end keys of all files to identify which file possibly contains the key, and then binary search inside that file to locate the exact position. In all, it is a full binary search across all the keys in the level.
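The two-step lookup described above can be sketched as follows. This is a minimal illustration, not RocksDB code: the function name `find_in_level` and the representation of a level as a list of sorted key lists are assumptions made for the example.

```python
from bisect import bisect_left


def find_in_level(files, key):
    """Locate `key` in one level.

    `files` is a hypothetical in-memory stand-in for a level: a list of
    non-empty, sorted key lists whose ranges are disjoint and in order.
    Returns (file_index, position_in_file), or None if the key is absent.
    """
    # Step 1: binary search over the largest key of every file to find the
    # only file whose range can contain the key. (A real implementation
    # would keep these boundaries in an index instead of rebuilding them.)
    end_keys = [f[-1] for f in files]
    i = bisect_left(end_keys, key)
    if i == len(files) or key < files[i][0]:
        return None  # key falls after the last file or in a gap between files

    # Step 2: binary search inside that file for the exact position.
    j = bisect_left(files[i], key)
    if j < len(files[i]) and files[i][j] == key:
        return (i, j)
    return None


level = [["a", "c", "e"], ["g", "i"], ["m", "p", "t"]]
print(find_in_level(level, "i"))
print(find_in_level(level, "f"))
```

Both steps are logarithmic, which is why the lookup behaves like a single binary search over every key in the level.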

All non-0 levels have target sizes. The goal of compaction is to keep the data size of each of those levels under its target. The size targets usually increase exponentially:

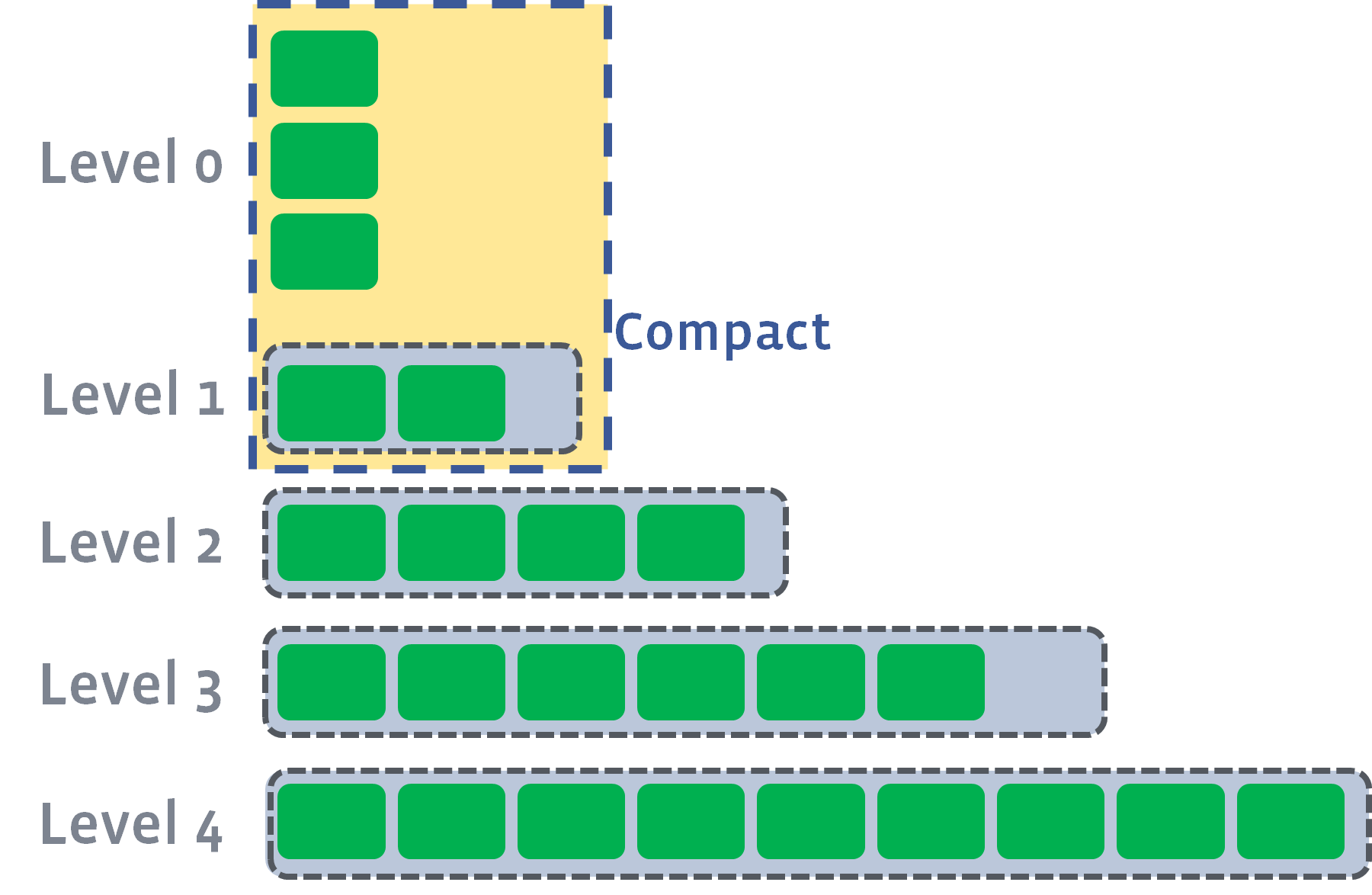

Compaction triggers when the number of L0 files reaches level0_file_num_compaction_trigger; the L0 files are then merged into L1. Normally we have to pick up all the L0 files because they usually overlap:

After the compaction, it may push the size of L1 to exceed its target:

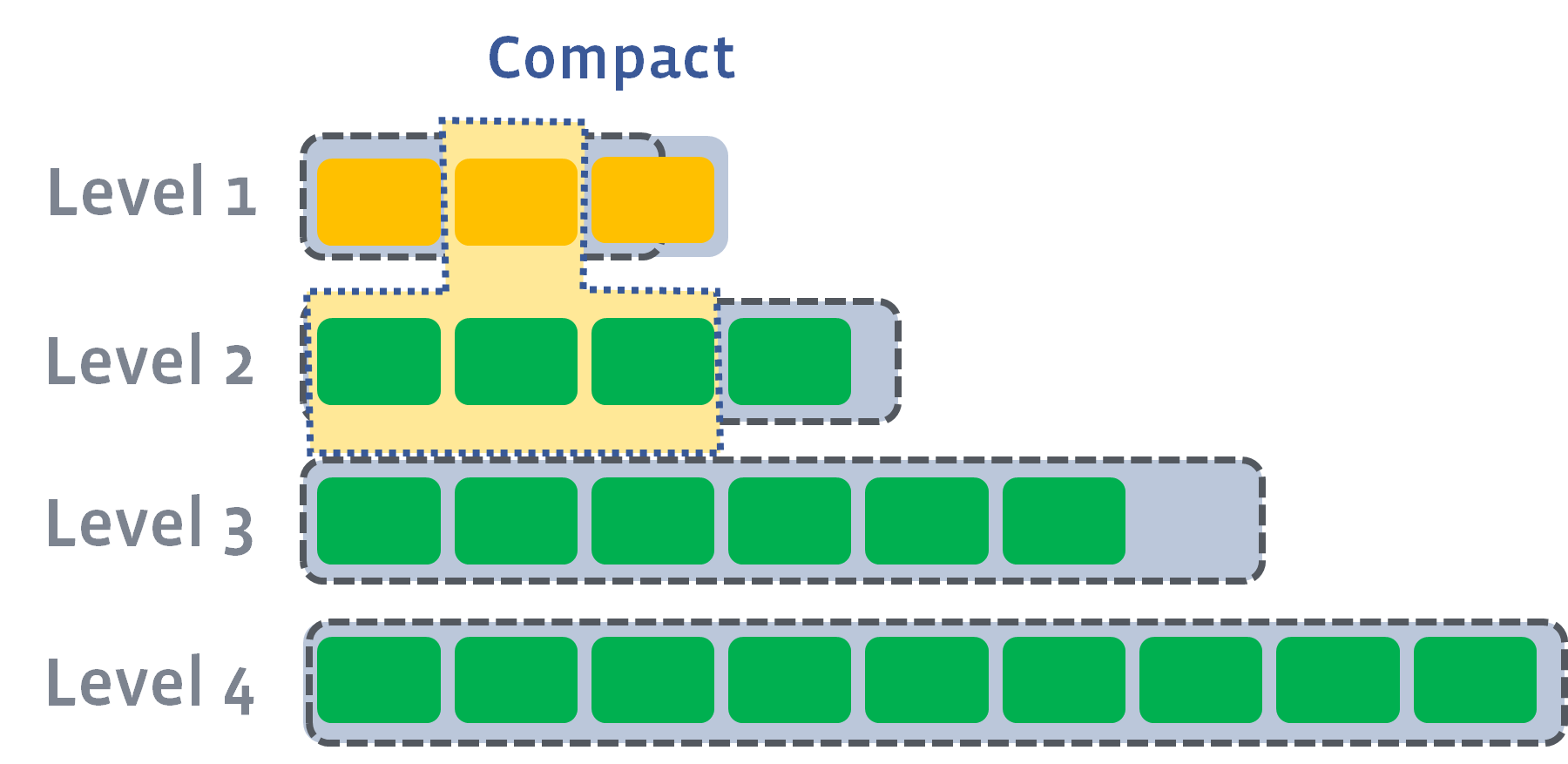

In this case, we will pick at least one file and merge it with the overlapping range of L2. The result files will be placed in L2:
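Selecting the overlapping range of the next level can be sketched as below. The helper `overlapping_files` and the `(smallest_key, largest_key)` tuples are hypothetical, chosen only to illustrate the idea; RocksDB's own file picking is more involved.

```python
def overlapping_files(next_level, start, end):
    """Return indexes of files in `next_level` whose key range intersects
    the closed range [start, end] of the file picked for compaction.

    `next_level` is an assumed representation: a list of
    (smallest_key, largest_key) tuples, sorted and non-overlapping.
    """
    return [
        i
        for i, (smallest, largest) in enumerate(next_level)
        if smallest <= end and largest >= start
    ]


l2 = [("a", "d"), ("e", "h"), ("i", "m"), ("n", "z")]
# An L1 file covering keys "g".."k" must be merged with the second and third
# L2 files; the other two are untouched by this compaction.
print(overlapping_files(l2, "g", "k"))
```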

and then

and then

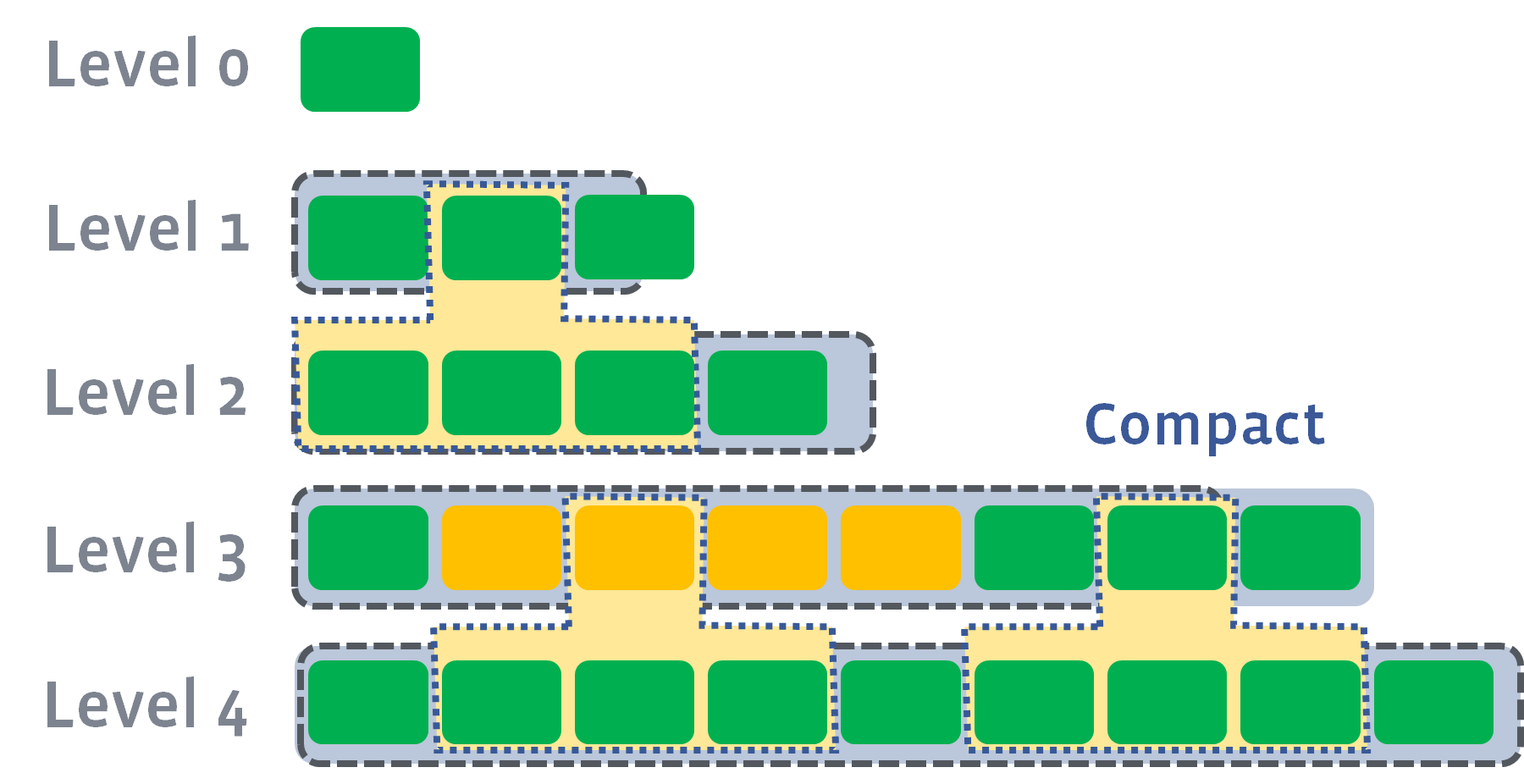

Multiple compactions can be executed in parallel if needed:

The maximum number of compactions allowed is controlled by max_background_compactions.

However, L0 to L1 compaction is not parallelized by default. In some cases, it may become a bottleneck that limits the total compaction speed. If so, users can set max_subcompactions to more than 1, and RocksDB will try to partition the range and use multiple threads to execute the compaction:

When multiple levels trigger the compaction condition, RocksDB needs to pick which level to compact first. A score is generated for each level:

For level-0, the score is the total number of files divided by level0_file_num_compaction_trigger, or the total size divided by max_bytes_for_level_base, whichever is larger. (If the number of files is smaller than level0_file_num_compaction_trigger, compaction won't trigger from level 0, no matter how big the score is.)
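The level-0 rule can be sketched as below. This is an illustration under assumptions: `level0_score` and `pick_level` are hypothetical helper names, and the scores of the non-zero levels are taken as inputs rather than computed here.

```python
def level0_score(num_files, total_bytes,
                 level0_file_num_compaction_trigger,
                 max_bytes_for_level_base):
    """Score for level 0: the larger of the file-count ratio and the size ratio."""
    return max(num_files / level0_file_num_compaction_trigger,
               total_bytes / max_bytes_for_level_base)


def pick_level(scores, l0_num_files, level0_file_num_compaction_trigger):
    """Return the level with the highest score, or None if there is no candidate.

    `scores` maps level number to score (hypothetical input format). Level 0
    is excluded while it holds fewer files than the trigger, however large
    its score is.
    """
    candidates = {
        level: score
        for level, score in scores.items()
        if level != 0 or l0_num_files >= level0_file_num_compaction_trigger
    }
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


MB = 1 << 20
# Six 21 MB-ish files with a trigger of 4: the file-count ratio dominates.
print(level0_score(6, 128 * MB, 4, 256 * MB))
# Two very large files: the size ratio is high, but level 0 is skipped.
scores = {0: level0_score(2, 1024 * MB, 4, 256 * MB), 1: 1.2, 2: 1.1}
print(pick_level(scores, l0_num_files=2, level0_file_num_compaction_trigger=4))
```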

We compare the scores of all levels, and the level with the highest score takes priority for compaction.

Which file(s) to compact from the level are explained in Choose Level Compaction Files.

If level_compaction_dynamic_level_bytes is false, level targets are determined as follows: L1's target is max_bytes_for_level_base, and then `Target_Size(Ln+1) = Target_Size(Ln) * max_bytes_for_level_multiplier * max_bytes_for_level_multiplier_additional[n]`. By default, every entry of max_bytes_for_level_multiplier_additional is 1.

For example, if max_bytes_for_level_base = 16384, max_bytes_for_level_multiplier = 10 and max_bytes_for_level_multiplier_additional is not set, then size of L1, L2, L3 and L4 will be 16384, 163840, 1638400, and 16384000, respectively.
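The arithmetic in this example can be reproduced with a short sketch. The function name `static_level_targets` and its argument layout are assumptions for illustration; only the recurrence itself comes from the text.

```python
def static_level_targets(max_bytes_for_level_base,
                         max_bytes_for_level_multiplier,
                         num_levels,
                         max_bytes_for_level_multiplier_additional=None):
    """Return the target sizes of L1 .. L(num_levels-1) in bytes."""
    additional = max_bytes_for_level_multiplier_additional or [1] * num_levels
    targets = [max_bytes_for_level_base]  # target of L1
    # Target_Size(Ln+1) = Target_Size(Ln) * multiplier * additional[n]
    for n in range(1, num_levels - 1):
        targets.append(
            targets[-1] * max_bytes_for_level_multiplier * additional[n]
        )
    return targets


# num_levels=5 gives levels L0..L4, so four targets are returned (L1..L4).
print(static_level_targets(16384, 10, num_levels=5))
```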

If level_compaction_dynamic_level_bytes is true, level targets are determined as follows:

The target size of the last level (num_levels-1) is always the actual size of that level. And then `Target_Size(Ln-1) = Target_Size(Ln) / max_bytes_for_level_multiplier`. We won't fill any level whose target would be lower than max_bytes_for_level_base / max_bytes_for_level_multiplier. Such levels are kept empty, and all L0 compactions skip them and go directly to the first level with a valid target size.

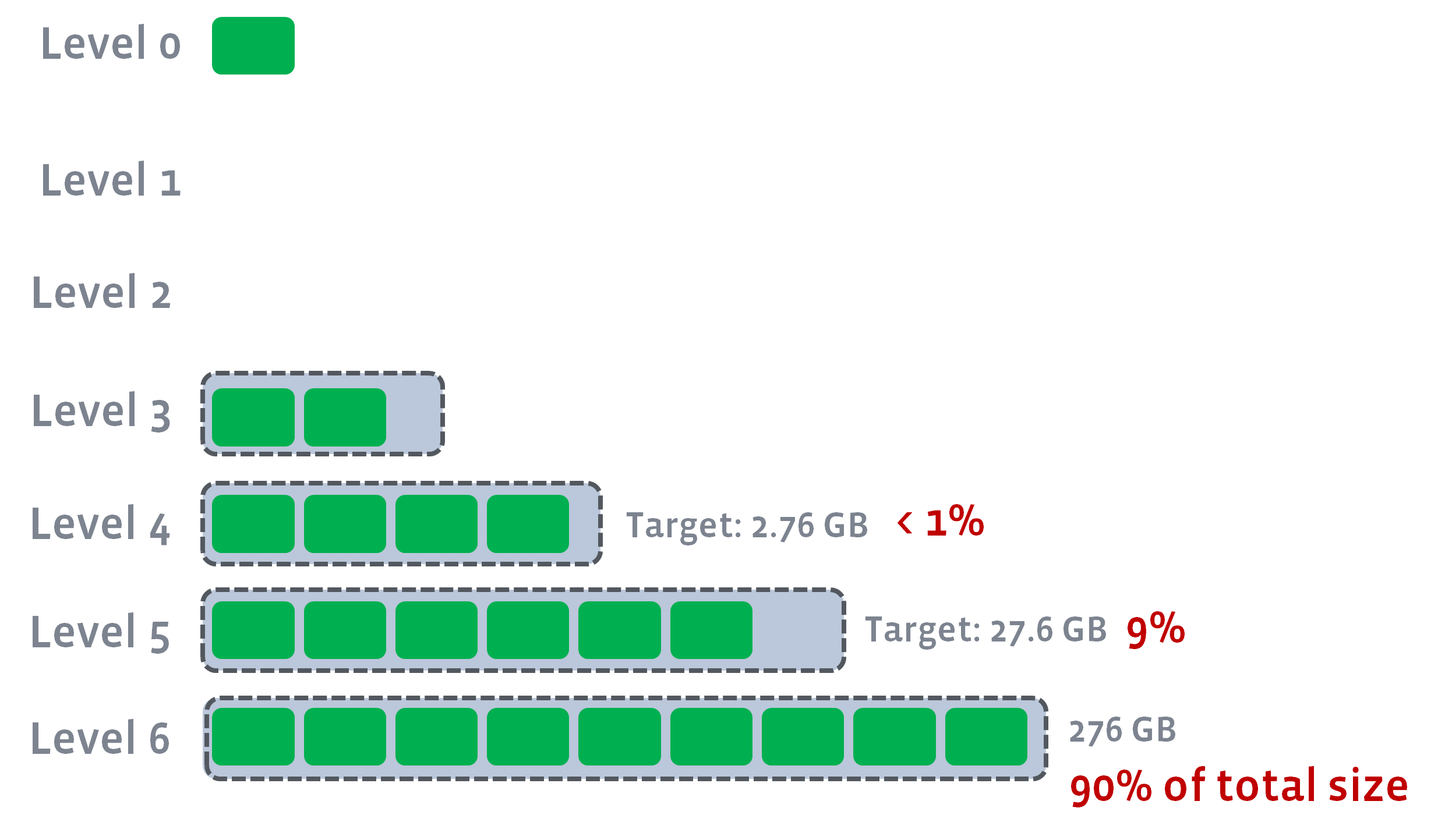

For example, if max_bytes_for_level_base is 1GB, max_bytes_for_level_multiplier is 10, num_levels=6 and the actual size of the last level is 276GB, then the target sizes of L1-L6 will be 0, 0, 0.276GB, 2.76GB, 27.6GB and 276GB, respectively.
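A simplified sketch of this bottom-up calculation follows. It implements only the rule stated in this section; the function name `dynamic_level_targets` and the parameter `num_targets` (the number of non-zero levels listed in the example, six here) are assumptions, and RocksDB's actual computation handles further cases.

```python
def dynamic_level_targets(last_level_size,
                          max_bytes_for_level_base,
                          max_bytes_for_level_multiplier,
                          num_targets):
    """Return target sizes for the non-zero levels, shallowest level first.

    Levels whose target would fall below base / multiplier stay empty (0).
    """
    floor = max_bytes_for_level_base / max_bytes_for_level_multiplier
    targets = [0.0] * num_targets
    # The last level's target is always its actual size.
    targets[-1] = float(last_level_size)
    size = last_level_size / max_bytes_for_level_multiplier
    # Walk upward, dividing by the multiplier at every step.
    for i in range(num_targets - 2, -1, -1):
        if size < floor:
            break  # this level and all shallower ones are kept empty
        targets[i] = size
        size /= max_bytes_for_level_multiplier
    return targets


# Sizes in GB: base of 1GB, multiplier of 10, last level holding 276GB.
targets = dynamic_level_targets(276, 1, 10, num_targets=6)
print([round(t, 3) for t in targets])
```

With these targets the levels sum to 306.636GB, so the last level holds 276 / 306.636, roughly 90% of the data.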

This is to guarantee a stable LSM-tree structure, which can't be guaranteed if level_compaction_dynamic_level_bytes is false. In the previous example:

We can guarantee that about 90% of the data is stored in the last level and about 9% in the second-to-last level. This brings multiple benefits.

A file could exist in the LSM tree without going through the compaction process for a really long time if there are no updates to the data in the file's key range. For example, in certain use cases, keys are "soft deleted": their values are set to empty instead of actually issuing a Delete. There might not be any more writes to this "deleted" key range, and if so, such data could remain in the LSM tree for a really long time, resulting in wasted space.